A few days ago, Amazon made a big announcement: the service Bedrock was finally released.

If you missed the news, here it is: Amazon is making a major push into generative AI and has announced an investment of up to $4 billion in Anthropic in order to compete with popular AI services like ChatGPT, Dall-E, MidJourney, etc. Amazon's first offering is Bedrock, a fully managed AI service that provides multiple foundation models such as Claude, Jurassic, and Stable Diffusion XL, among others.

In my previous articles, I created Unity3D applications communicating with ChatGPT and Dall-E. Let’s do the same with Bedrock!

Do you prefer something more interactive instead of reading? Check my video!

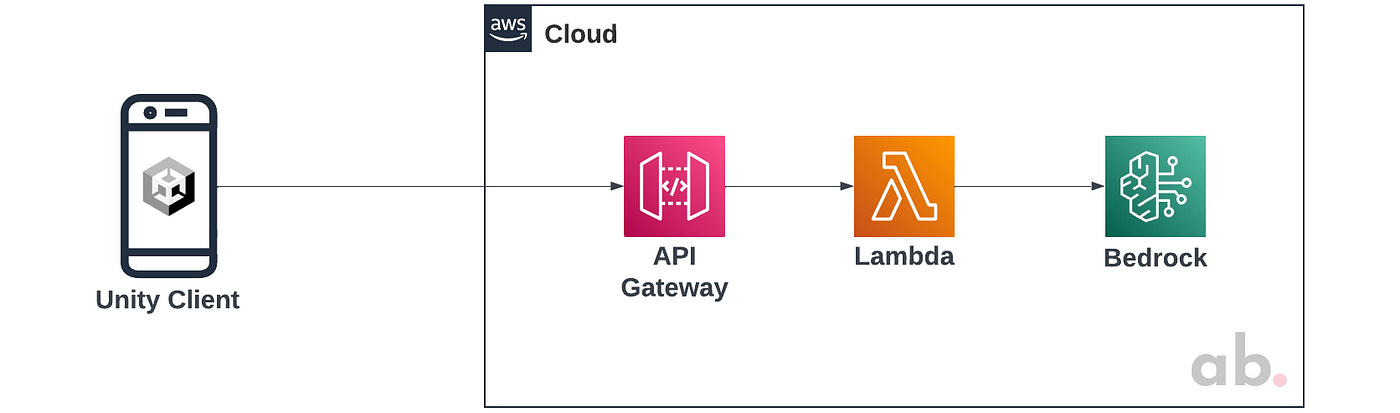

General Architecture

Here is the general architecture of our application:

We use 3 Amazon services:

- API Gateway to create, expose, and consume endpoints.

- Lambda to receive the client’s requests and communicate with Bedrock.

- Bedrock to generate answers based on the client’s prompts.

If you want to go further and add a login mechanism with Cognito, please refer to my previous article:

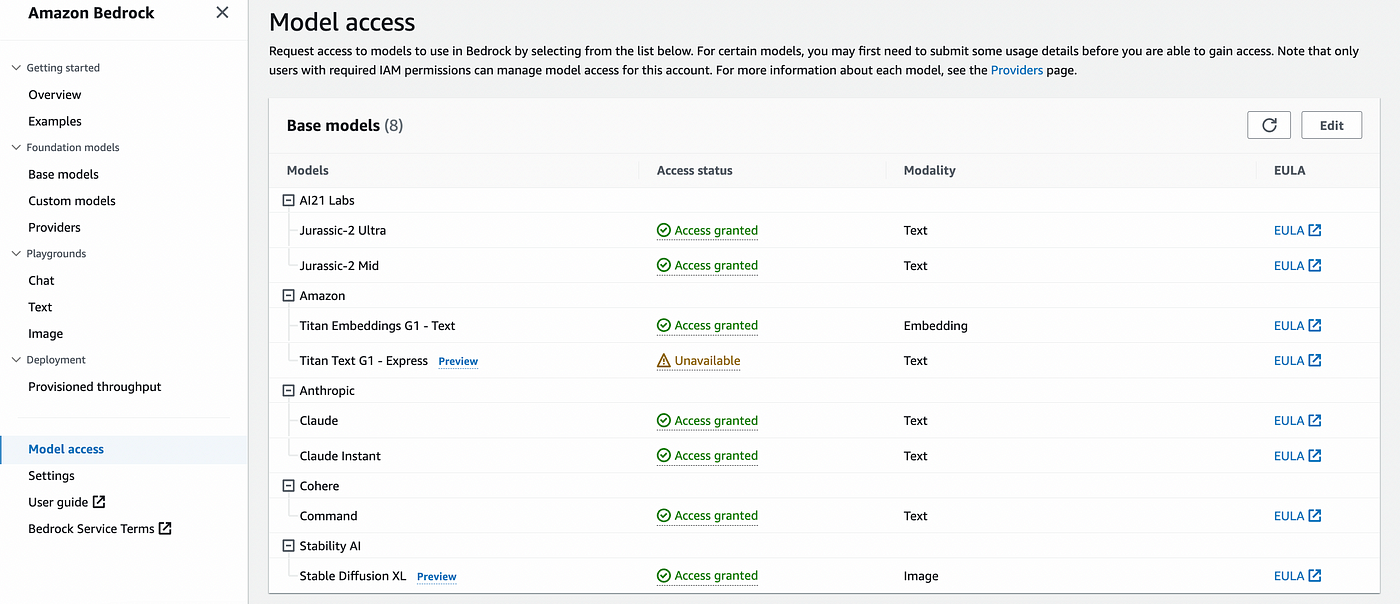

Bedrock

First, we have to enable the foundation models we want to use. By default, all the models are disabled. Go to the Bedrock console and, in the model access section, enable the models you will use. If you have a valid payment method on file, activating the models only takes a few minutes.

If you don’t enable the models in the Bedrock console, you will receive this error in Lambda:

An error occurred (AccessDeniedException) when calling the InvokeModel operation: Your account is not authorized to invoke this API operation.
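Inside the Lambda function, this failure surfaces as a botocore `ClientError`. Here is a minimal sketch, assuming you want to turn it into a readable hint. The helper name `explain_bedrock_error` is hypothetical; it only relies on the `response["Error"]["Code"]` field that botocore attaches to client errors. Note that a missing IAM permission produces the same error code, so the hint mentions both causes.

```python
def explain_bedrock_error(exc: Exception) -> str:
    """Return a human-readable hint for an exception raised by invoke_model.

    botocore's ClientError carries a `response` dict with an "Error" entry;
    exceptions without that shape are reported generically.
    """
    error = getattr(exc, "response", {}).get("Error", {})
    code = error.get("Code", "")
    if code == "AccessDeniedException":
        return (
            "Access denied: enable the model in the model access section of "
            "the Bedrock console, and check the Lambda role's IAM policy."
        )
    return f"Bedrock call failed: {code or type(exc).__name__}"
```

You could call it from an `except Exception as exc:` block around the `invoke_model` call and log the returned string.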

Lambda, Boto3 and Bedrock

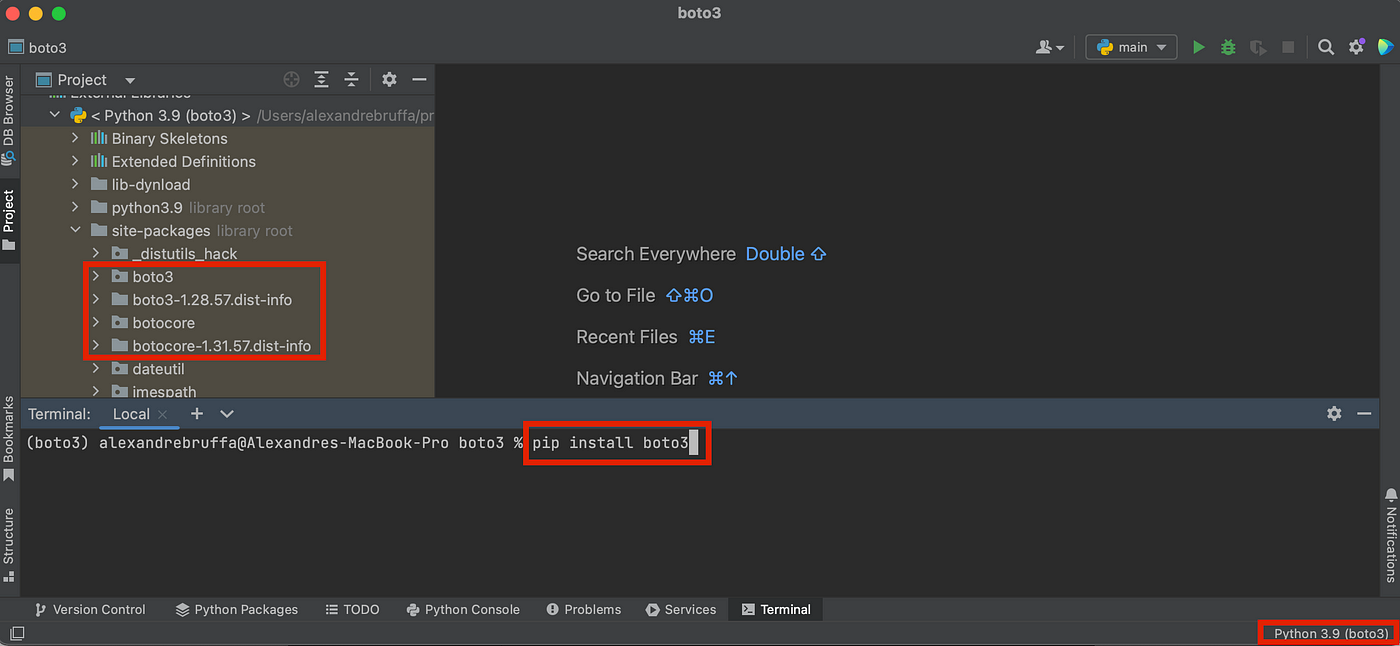

If you follow my work, you know I’m a Python guy, so I will use the Python SDK for AWS, Boto3. Good news: Bedrock is available on Boto3; bad news: Lambda runs an old version of Boto3 in which Bedrock does not exist yet.

💡 Here is a workaround: creating a new Lambda layer with an up-to-date version of Boto3. This is how to do it:

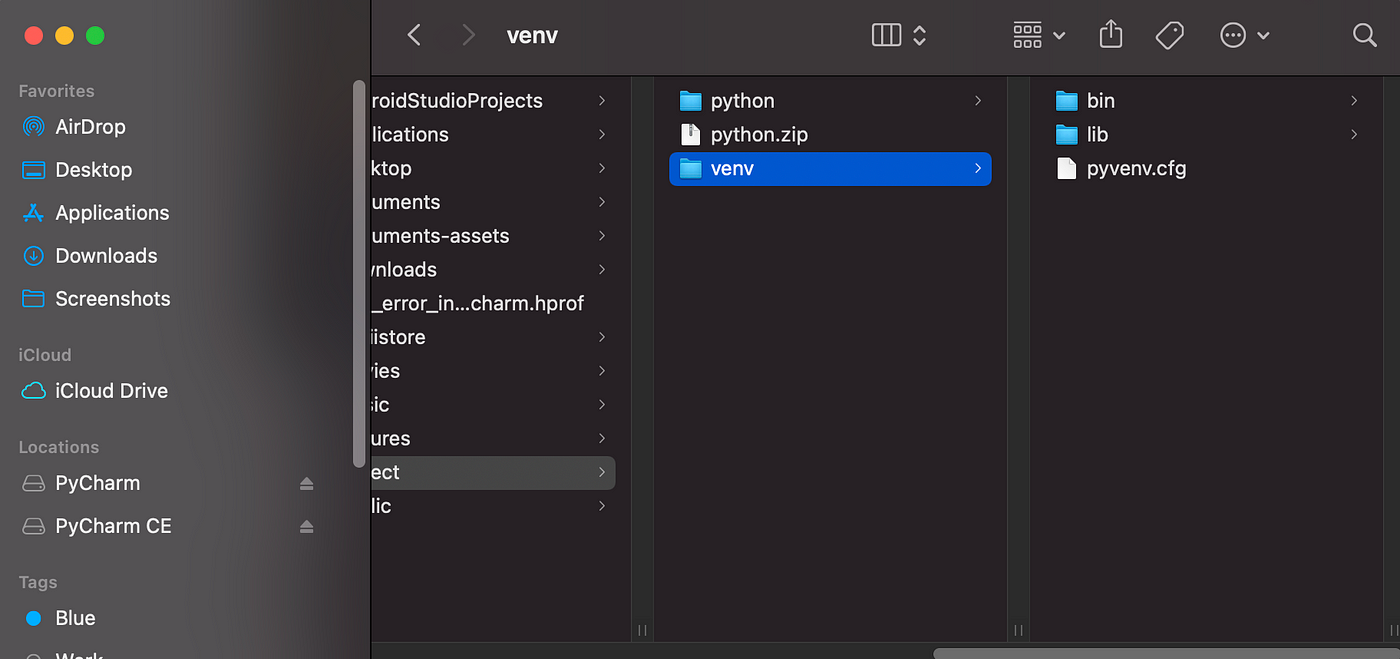

1. In PyCharm, create a new project and install boto3 in a new virtual environment. This installs Boto3 and all its dependencies.

2. Go to the venv folder of your project and copy the lib folder into a new folder called python. Then, zip it.

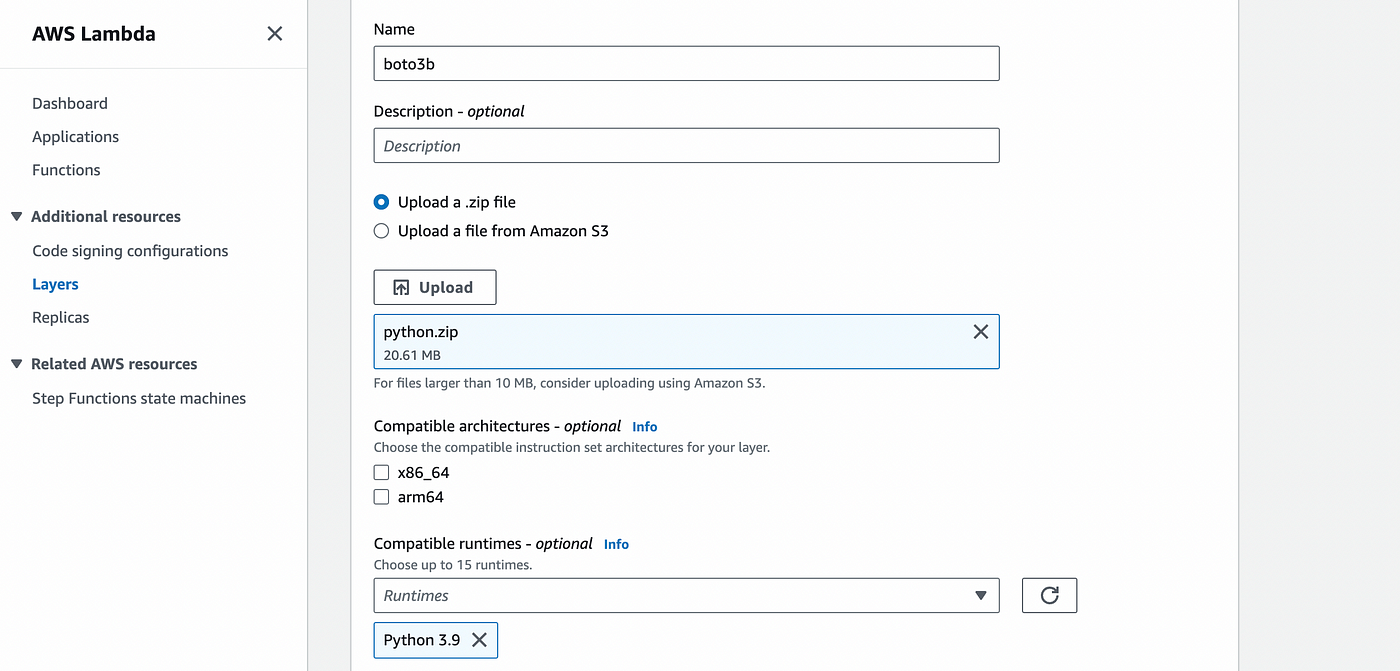

3. Go to the Layers section of the Lambda console and create a new layer. Upload the zip file we have created before and choose the same Python version as your local Python virtual environment for the runtime.
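If you prefer to script the zipping step, here is a minimal sketch using only the standard library. It assumes you have already copied the `lib` folder into a folder named `python`, as in step 2; the function name `zip_layer` and the paths are hypothetical. The point it illustrates is that every entry in the archive must start with `python/`, because that is where Lambda looks for Python layer content.

```python
import zipfile
from pathlib import Path


def zip_layer(python_dir: Path, zip_path: Path) -> list[str]:
    """Zip `python_dir` (a folder named "python") for use as a Lambda layer.

    Entries are stored relative to the parent of `python_dir`, so each one
    starts with "python/". Returns the sorted entry names for inspection.
    """
    root = python_dir.parent
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(python_dir.rglob("*")):
            if path.is_file():
                archive.write(path, path.relative_to(root).as_posix())
    with zipfile.ZipFile(zip_path) as archive:
        return sorted(archive.namelist())
```

For example, `zip_layer(Path("python"), Path("boto3-layer.zip"))` produces the file to upload in the Layers section. Keep the zip file outside the `python` folder so it does not end up inside itself.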

The Lambda Functions

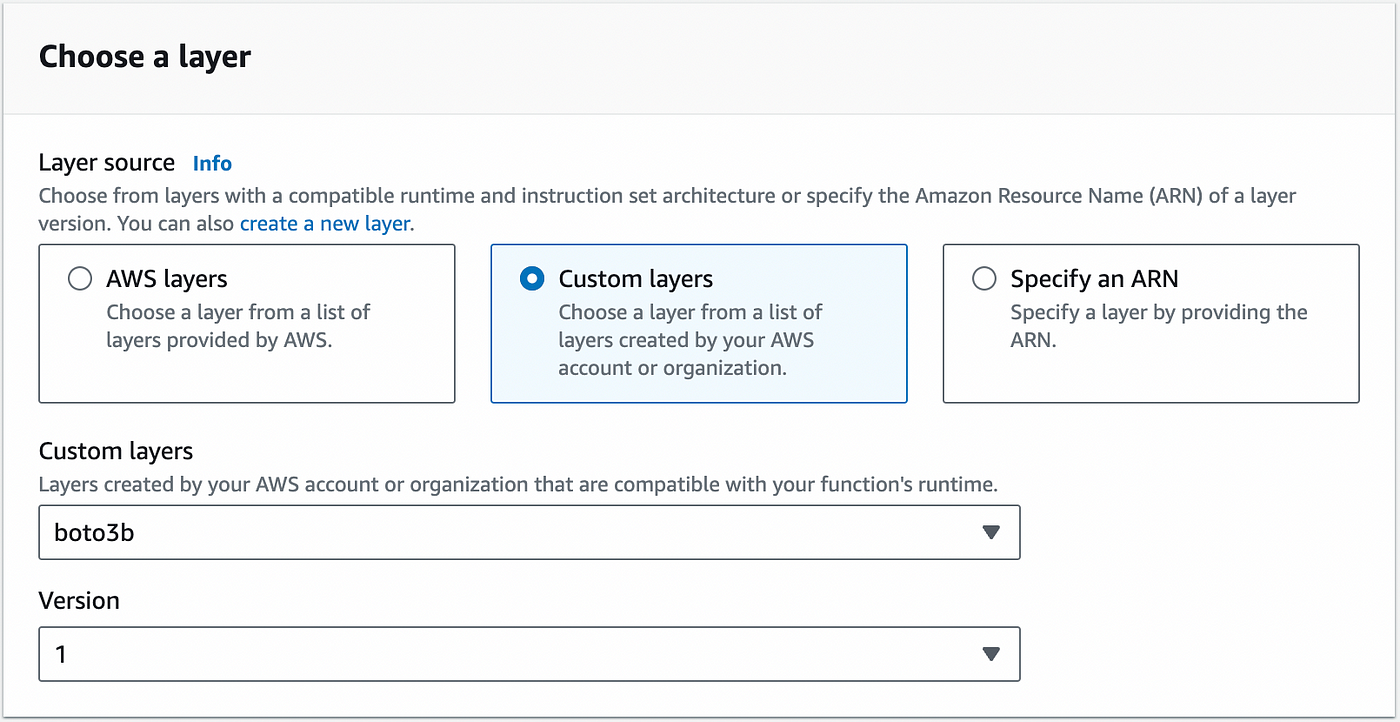

We create 2 Lambda functions, one for text generation and the other for image generation. Both use the same runtime version as the layer (in my case, Python 3.9), and we add the layer we created earlier to each of them.

The text generation function

For the text generation, I use the Claude model and implement it as described in the Amazon documentation.
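Here is a minimal sketch of what such a function can look like. It is not necessarily identical to my original code. It assumes the model ID `anthropic.claude-v2`, the Claude text-completions request format (`prompt` and `max_tokens_to_sample`), and a hypothetical event of the form `{"prompt": "..."}` passed through by API Gateway.

```python
import json

# Assumption: Claude 2 model ID; check the Bedrock documentation for yours.
MODEL_ID = "anthropic.claude-v2"


def generate_text(client, prompt: str, max_tokens: int = 300) -> str:
    """Send `prompt` to Claude through Bedrock and return the completion."""
    body = json.dumps({
        # The text-completions format expects Human/Assistant turns.
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    })
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=body,
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing a JSON document.
    return json.loads(response["body"].read())["completion"].strip()


def lambda_handler(event, context):
    # boto3 is imported here so generate_text can be tested without it.
    import boto3

    client = boto3.client("bedrock-runtime")
    # Hypothetical event shape: {"prompt": "..."}.
    return {"text": generate_text(client, event["prompt"])}
```

Passing the client as a parameter keeps the Bedrock call separate from the Lambda plumbing, which makes the function easy to test with a fake client.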

The image generation function

For the image generation, I use the Stable Diffusion XL model and implement it as described in the Amazon documentation.
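Here is a minimal sketch of the image function, again not necessarily identical to my original code. It assumes the model ID `stability.stable-diffusion-xl-v1`, illustrative values for `cfg_scale` and `steps`, and a hypothetical event of the form `{"prompt": "..."}`. The function returns the base64 string found in the first artifact of the response.

```python
import json

# Assumption: SDXL model ID; check the Bedrock documentation for yours.
MODEL_ID = "stability.stable-diffusion-xl-v1"


def generate_image(client, prompt: str) -> str:
    """Ask Stable Diffusion XL for an image and return it as base64 text."""
    body = json.dumps({
        "text_prompts": [{"text": prompt}],
        "cfg_scale": 10,  # illustrative value
        "steps": 50,      # illustrative value
    })
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=body,
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Each artifact holds one generated image as a base64 string.
    return payload["artifacts"][0]["base64"]


def lambda_handler(event, context):
    # boto3 is imported here so generate_image can be tested without it.
    import boto3

    client = boto3.client("bedrock-runtime")
    # Hypothetical event shape: {"prompt": "..."}.
    return {"image": generate_image(client, event["prompt"])}
```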

Note that the model IDs can be found in the Bedrock documentation.

The Lambda Policy

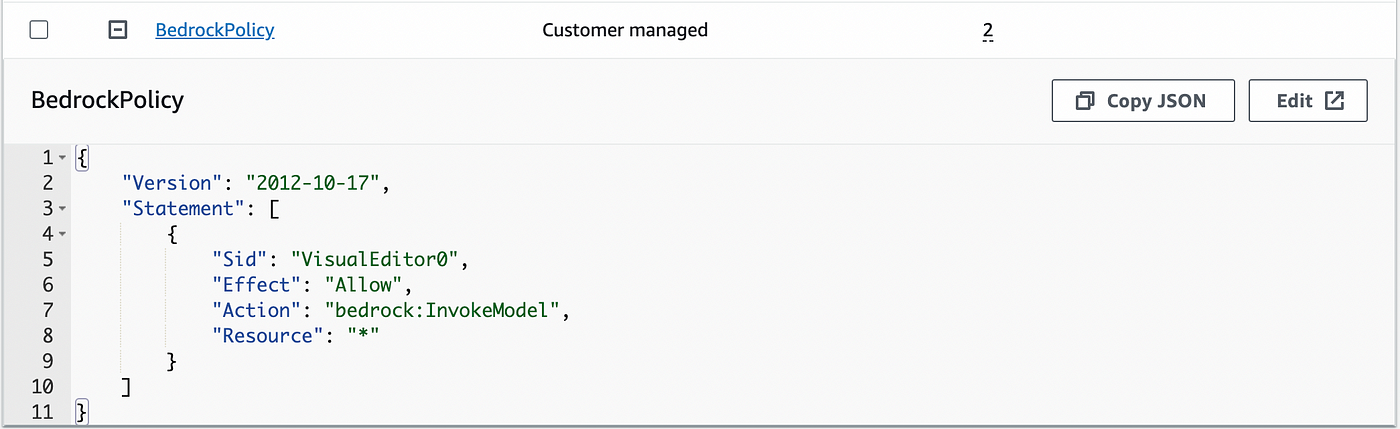

In order to allow Lambda to invoke a Bedrock model, we create a new managed policy and attach it to the Lambda functions' roles:

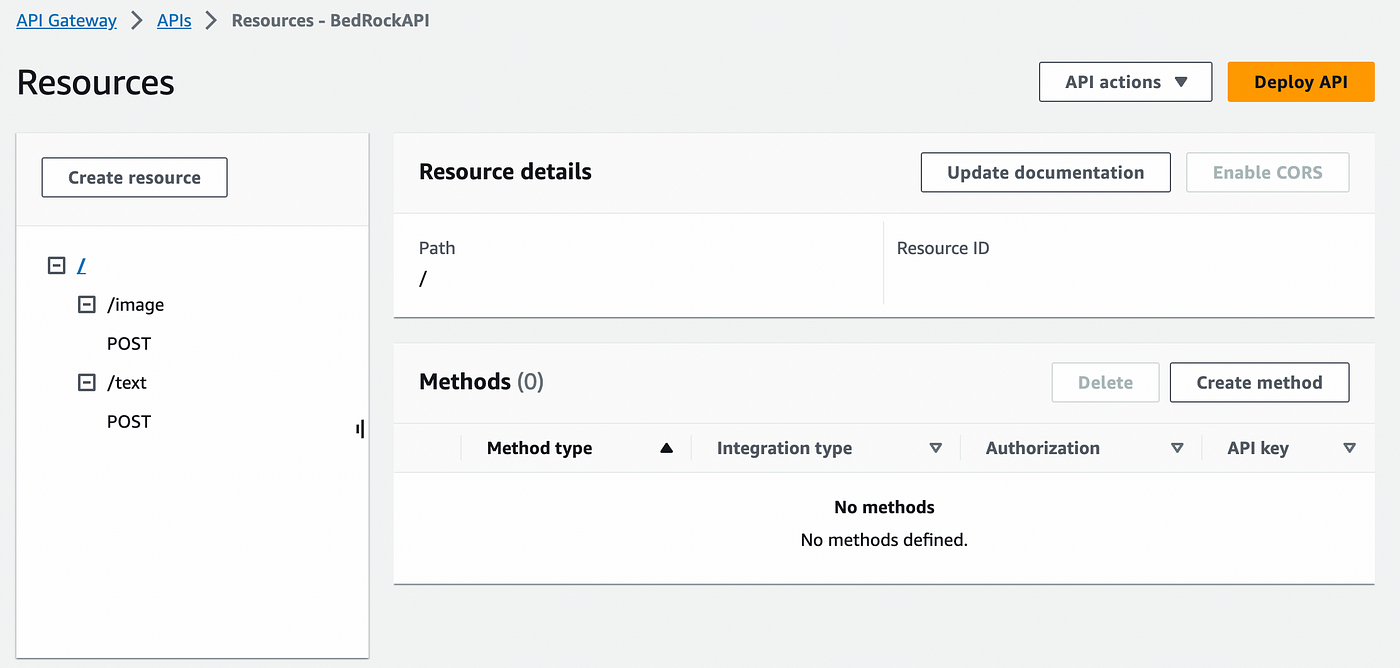
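A minimal sketch of such a policy, built in Python so you can print the JSON and paste it into the IAM console. The resource pattern is an assumption that allows every foundation model in every region; you may want to restrict it to the specific model ARNs you enabled.

```python
import json

# Assumption: allow invoking any foundation model; narrow this if you can.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["bedrock:InvokeModel"],
            "Resource": "arn:aws:bedrock:*::foundation-model/*",
        }
    ],
}

policy_json = json.dumps(policy, indent=2)
print(policy_json)
```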

API Gateway

In the API Gateway console, we create a new REST API with 2 resources: image and text. Each resource has a POST method integrated with the corresponding Lambda function we created earlier.

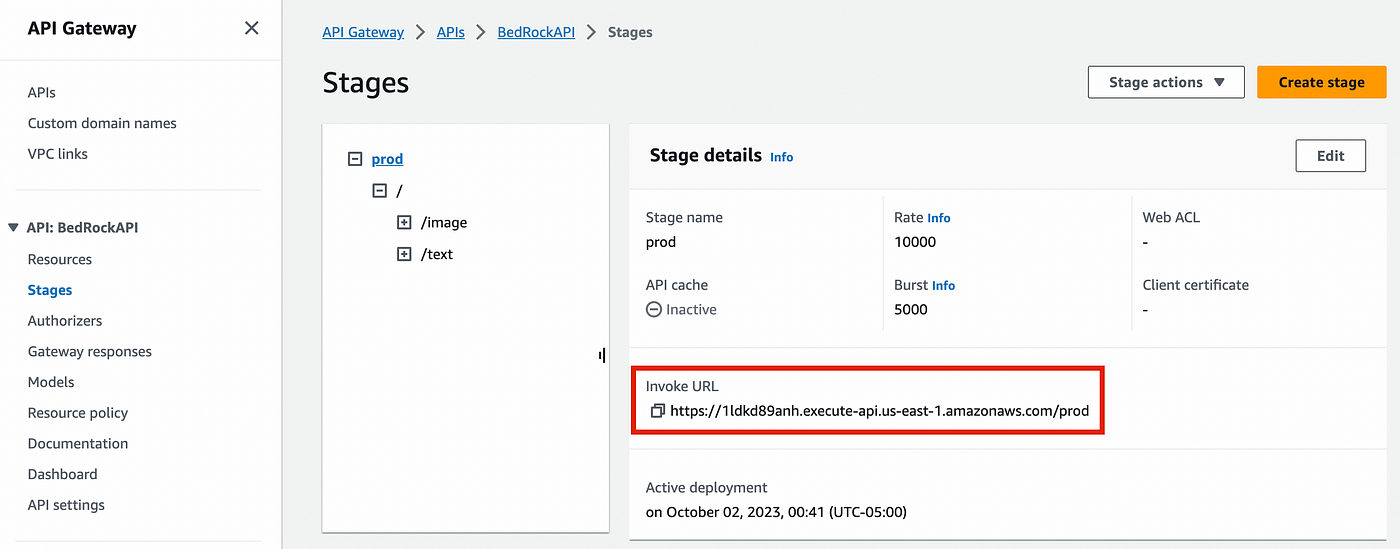

When deploying the API, you will be asked to create a new stage. After the creation of the stage, the final endpoint will be shown. You will have to use this URL in the Unity client to perform the request.
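Before moving to Unity, it can be handy to check the endpoint from a small script. Here is a minimal sketch using only the standard library. The API ID, region, and stage name are placeholders, and the `{"prompt": "..."}` payload is a hypothetical request shape that must match what your Lambda function expects.

```python
import json
import urllib.request


def invoke_url(api_id: str, region: str, stage: str, resource: str) -> str:
    """Build the invoke URL of a REST API resource deployed to a stage."""
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/{resource}"


def build_request(url: str, prompt: str) -> urllib.request.Request:
    """Prepare a JSON POST request carrying the prompt (nothing is sent yet)."""
    data = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

To actually send the request, pass the result to `urllib.request.urlopen` and read the response body, for example with the URL returned by `invoke_url("abc123", "us-east-1", "dev", "text")`, where all four values are placeholders.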

The Unity Client

The Unity client allows the user to send a message to generate a text or an image as an answer:

Handling the request

First, we need to create serializable classes to hold the data we send and receive:

Sending a request in Unity is an asynchronous process. Therefore, we create a coroutine that sends the request to the server:

Dealing with the generated image

If we ask for an image generation, the result sent by Bedrock is a base64 image. To show it on the Unity UI, we must convert it into bytes and create a Texture2D. Then, we assign the texture to the RawImage component of the UI.
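The decoding step can also be checked outside Unity. Here is a minimal Python sketch of the same idea: decode the base64 string into bytes, then inspect the first bytes to confirm they form an image. It assumes the model returns PNG data, which you should verify on your side; the function names are hypothetical.

```python
import base64

# The 8-byte signature that starts every PNG file.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def decode_image(image_b64: str) -> bytes:
    """Decode a base64 string into raw bytes, rejecting invalid characters."""
    return base64.b64decode(image_b64, validate=True)


def is_png(data: bytes) -> bool:
    """Return True if the bytes start with the PNG signature."""
    return data.startswith(PNG_SIGNATURE)
```

Writing the decoded bytes to a file is a quick way to look at the picture before wiring up the Unity UI.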

Result

Here is the result with text and image generations:

Costs

Let’s check with the AWS Calculator and the Bedrock pricing sheet what our system would cost in a very pessimistic scenario: you love the app, and you perform 2,000 requests a month, 1,000 for text generation with the Claude model and 1,000 for image generation with Stable Diffusion XL.

- API Gateway: With 2,000 requests to our REST API, the monthly cost is 0.01 USD.

- Lambda: With 2,000 requests with an average time of 5 seconds and 512 MB of memory allocation, the monthly cost is 0.08 USD.

- Bedrock: 1,000 text generations per month with a maximum of 300 output tokens and 20 input tokens per request would cost, in the worst case, $0.2204 for the input tokens plus $9.804 for the output tokens. 1,000 image generations would give us a bill of $18.

Total: The total bill for our system would be approximately 28 USD monthly. It’s quite affordable!
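The arithmetic behind this total can be sketched in a few lines. The per-unit rates below are not quoted from the pricing sheet: they are the rates implied by the figures above (for example, $0.2204 for 20,000 input tokens), so treat them as assumptions and check the current prices. The API Gateway and Lambda amounts are the fixed estimates for 2,000 requests and do not scale in this sketch.

```python
# Rates implied by the article's figures (assumptions, not official prices).
CLAUDE_INPUT_PER_1K_TOKENS = 0.01102
CLAUDE_OUTPUT_PER_1K_TOKENS = 0.03268
SDXL_PER_IMAGE = 0.018

# Fixed estimates from the AWS Calculator for 2,000 requests per month.
API_GATEWAY_MONTHLY = 0.01
LAMBDA_MONTHLY = 0.08


def bedrock_cost(text_requests: int, image_requests: int,
                 input_tokens: int = 20, output_tokens: int = 300) -> float:
    """Worst-case monthly Bedrock cost in USD for the given request counts."""
    text_in = text_requests * input_tokens / 1000 * CLAUDE_INPUT_PER_1K_TOKENS
    text_out = text_requests * output_tokens / 1000 * CLAUDE_OUTPUT_PER_1K_TOKENS
    images = image_requests * SDXL_PER_IMAGE
    return text_in + text_out + images


total = API_GATEWAY_MONTHLY + LAMBDA_MONTHLY + bedrock_cost(1000, 1000)
print(f"Estimated monthly total: {total:.2f} USD")
```

With these rates, the total comes to about 28.11 USD, in line with the estimate above. Bedrock accounts for almost all of it.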

Final Thoughts

Using Bedrock is very intuitive, and integrating it with other Amazon services is absolutely straightforward. The fact that Bedrock is an in-house product makes the integration easier: there is no need for API keys or external requests to make it work, resulting in greater performance and security.

All the code in this article was tested using Unity 2021.3.3 and Visual Studio Community 2022 for Mac. The mobile device I used to run the Unity app is a Galaxy Tab A7 Lite with Android 11.

Thanks for reading until the end!

Resources

Here are the resources mentioned in the article:

- The Unity package of the application

- The text generation function in Python

- The image generation function in Python